While listening to Ross’s thunderous snoring on our 7-hour flight to Amsterdam, I decided I might as well start working on my IM project, since sleep was already out of the question.

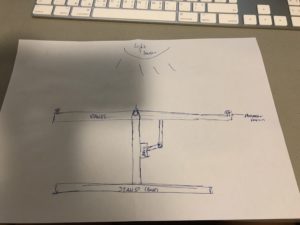

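My portrait starts as a black screen, like a dark room, with only a light switch in the middle. Once you click the switch, the “lights” turn on and the actual portrait is revealed. To make things a bit more interesting, I also added some hover interactions: moving the mouse over the eyes puts on sunglasses, over the mouth adds a mustache, over either ear shows AirPods, and over the eyebrows raises them.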
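Every one of these interactions works the same way: the sketch checks whether a point (the last click, or the current mouse position) lies strictly inside a rectangle. As a minimal sketch in plain Java (Processing compiles to Java, so the method bodies would also work inside a sketch), that check could be factored into one helper. The names `HitTest`, `inRect`, `onSwitch`, `over` and the region constants are hypothetical; only the coordinates are copied from the sketch.

```java
// Hypothetical helper class: a plain-Java version of the rectangle checks used in the sketch.
class HitTest {
    // True when (px, py) lies strictly inside the box (left, top)-(right, bottom),
    // matching the strict > and < comparisons in the sketch (edges do not count).
    static boolean inRect(int px, int py, int left, int top, int right, int bottom) {
        return px > left && px < right && py > top && py < bottom;
    }

    // The switch image is drawn at (300, 300) with size 200 x 200.
    static boolean onSwitch(int px, int py) {
        return inRect(px, py, 300, 300, 500, 500);
    }

    // Hover regions from the sketch, stored as {left, top, right, bottom}.
    static final int[] EYES = {250, 250, 550, 340};      // sunglasses
    static final int[] MOUTH = {370, 545, 430, 565};     // mustache
    static final int[] EYEBROWS = {250, 230, 550, 250};  // raised eyebrows

    static boolean over(int px, int py, int[] r) {
        return inRect(px, py, r[0], r[1], r[2], r[3]);
    }

    public static void main(String[] args) {
        System.out.println(onSwitch(400, 400));     // true: center of the switch
        System.out.println(onSwitch(300, 400));     // false: the edge itself is excluded
        System.out.println(over(400, 555, MOUTH));  // true: hovering over the mouth
    }
}
```

Keeping the bounds in one place like this means a region only has to be adjusted once if a feature moves.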

Here’s a video demonstrating how it works:

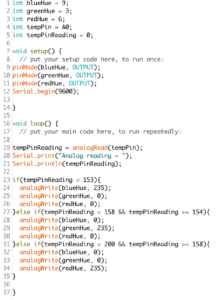

My code:

```processing
int clickedX;
int clickedY;
PImage img;

void setup() {
  size(800, 800);
  background(0);  // the "dark room"

  // switch.jpg must be in the sketch's data folder
  img = loadImage("switch.jpg");
  image(img, 300, 300, 200, 200);
}

void draw() {
  // Draw the portrait only if the last click landed on the switch image
  // (drawn at 300, 300 with size 200 x 200). All hover effects live inside
  // this block so they only appear once the lights are on.
  if (clickedX > 300 && clickedX < 500 && clickedY > 300 && clickedY < 500) {
    background(255, 150, 150);
    noStroke();

    // face
    fill(224, 172, 105);
    rect(200, 150, 400, 500, 70);

    // eyebrows
    strokeWeight(20);
    stroke(0);
    line(250, 250, 290, 230);
    line(290, 230, 380, 250);
    line(420, 250, 510, 230);
    line(510, 230, 550, 250);

    // eyes: whites, irises, pupils
    noStroke();
    fill(255);
    ellipse(320, 300, 100, 70);
    ellipse(480, 300, 100, 70);
    fill(25, 51, 0);
    ellipse(322, 300, 60, 60);
    ellipse(482, 300, 60, 60);
    fill(0);
    ellipse(322.5, 300, 40, 40);
    ellipse(482.5, 300, 40, 40);

    // sunglasses: shown while the mouse is over the eyes
    if (mouseX > 250 && mouseX < 550 && mouseY > 250 && mouseY < 340) {
      noStroke();
      fill(0);
      rect(250, 255, 140, 100, 30);  // lenses
      rect(410, 255, 140, 100, 30);
      rect(300, 280, 150, 20, 30);   // bridge and arms
      rect(200, 280, 150, 20, 30);
      rect(540, 280, 60, 20, 30);
    }

    // nose
    strokeWeight(8);
    stroke(25, 51, 0);
    line(400, 350, 430, 450);
    line(400, 450, 430, 450);

    // mouth
    strokeWeight(12);
    stroke(139, 0, 0);
    bezier(375, 550, 390, 555, 400, 560, 425, 560);

    // ears: skin-colored half ellipses with a darker inner part
    noStroke();
    fill(224, 172, 105);
    arc(200, 350, 80, 100, PI/2, 3*PI/2);
    arc(600, 350, 80, 100, -PI/2, PI/2);
    fill(25, 51, 0);
    arc(200, 350, 40, 60, PI/2, 3*PI/2);
    arc(600, 350, 40, 60, -PI/2, PI/2);

    // hair: 13 identical strands, each shifted 30 px to the right (x = 210 ... 570)
    strokeWeight(8);
    stroke(0);
    for (int x = 210; x <= 570; x += 30) {
      bezier(x, 170, x + 3, 150, x + 7, 130, x + 30, 80);
    }

    // chin
    strokeWeight(4);
    stroke(25, 51, 0);
    noFill();
    bezier(360, 635, 375, 660, 385, 660, 400, 635);
    bezier(400, 635, 415, 660, 425, 660, 440, 635);

    // mustache: shown while the mouse is over the mouth
    if (mouseX > 370 && mouseX < 430 && mouseY > 545 && mouseY < 565) {
      strokeWeight(10);
      stroke(0);
      noFill();
      bezier(300, 480, 310, 505, 370, 508, 380, 510);
      bezier(420, 510, 430, 508, 490, 505, 500, 480);
    }

    // AirPods: hovering over either ear shows both
    boolean overLeftEar = mouseX > 120 && mouseX < 200 && mouseY > 350 && mouseY < 430;
    boolean overRightEar = mouseX > 600 && mouseX < 680 && mouseY > 350 && mouseY < 430;
    if (overLeftEar || overRightEar) {
      noStroke();
      fill(255);
      rect(180, 340, 15, 70, 6);  // left
      rect(185, 340, 15, 20, 6);
      rect(605, 340, 15, 70, 6);  // right
      rect(600, 340, 15, 20, 6);
    }

    // raised eyebrows: while the mouse is over the brows, paint over them
    // in skin tone, then redraw them 30 px higher
    if (mouseX > 250 && mouseX < 550 && mouseY > 230 && mouseY < 250) {
      strokeWeight(22);
      stroke(224, 172, 105);
      line(250, 250, 290, 230);
      line(290, 230, 380, 250);
      line(420, 250, 510, 230);
      line(510, 230, 550, 250);

      strokeWeight(20);
      stroke(0);
      line(250, 220, 290, 200);
      line(290, 200, 380, 220);
      line(420, 220, 510, 200);
      line(510, 200, 550, 220);
    }
  }
}

void mouseClicked() {
  // remember where the last click happened; draw() compares it with the switch bounds
  clickedX = mouseX;
  clickedY = mouseY;
}
```
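Because `draw()` compares the position of the most recent click with the switch bounds, clicking anywhere off the switch after the lights are on makes that check fail again, and the portrait stops being redrawn. One possible alternative, not what the sketch above does, is a boolean that flips only when the switch itself is clicked; this would also let a second click on the switch turn the lights back off. Below is a minimal plain-Java sketch of that state, assuming toggle behavior is wanted; `LightSwitch`, `click()` and `isOn()` are hypothetical names.

```java
// Hypothetical toggle state for the light switch (an alternative design, not the original sketch).
class LightSwitch {
    private boolean lightsOn = false;  // start in the "dark room"

    // Switch bounds from the sketch: image drawn at (300, 300), 200 x 200 pixels.
    private static boolean onSwitch(int x, int y) {
        return x > 300 && x < 500 && y > 300 && y < 500;
    }

    // Flip the state only when the click lands on the switch; other clicks are ignored.
    void click(int x, int y) {
        if (onSwitch(x, y)) {
            lightsOn = !lightsOn;
        }
    }

    boolean isOn() {
        return lightsOn;
    }

    public static void main(String[] args) {
        LightSwitch s = new LightSwitch();
        s.click(400, 400);  // on the switch: lights on
        s.click(50, 50);    // elsewhere: no change
        System.out.println(s.isOn());  // true
        s.click(450, 350);  // on the switch again: lights off
        System.out.println(s.isOn());  // false
    }
}
```

In a Processing sketch, `mouseClicked()` would call `click(mouseX, mouseY)` and `draw()` would test `isOn()` instead of the stored `clickedX`/`clickedY`.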