Background

My final project was a “DJ Mixer” in which users could control and mix two songs playing at the same time using their own hands. For example, the instrumental of Ed Sheeran’s “Shape of You” could be played under Justin Bieber singing “Love Yourself”.

Timeline

1. Used the Minim Processing library to play two songs at the same time, one karaoke (instrumental) and one a cappella (vocals only), and started working on a volume controller that initially worked with the computer’s cursor.

2. Integrated it with the Kinect using Dan Shiffman’s library and added pause buttons.

3. Added many more songs and removed the pause buttons. Instead, hovering over the currently playing song pauses it.

4. I then made many improvements to the previous version:

- I improved the design a bit.

- Cropped the songs’ mp3 files so they start directly with the music, skipping the silence or intro that many of the YouTube videos had.

- Made the webcam visible in a small area at the bottom.

- I also made the volume controls always visible, since users didn’t realize that functionality existed.

5. The final update was mainly a big UI and design overhaul, which I go over in the next section. I also added a “DJ Training” stage to teach users how to move their hand around the screen to select items. (In the video below I use the computer cursor as the pointer for demo purposes.)

Technical Aspect

The base of my project was not hard to make. I am glad I went with an object-oriented approach, as I’m sure it saved me TONS of time once I started making upgrades. I created a Song class that handles loading the song file, displaying the song on screen, hover detection, and playing and pausing. I then instantiated every song in a single songs array, which made adding new songs trivial. The same approach let me add a separate VolumeController class and design improvements such as the font and hover shading easily.
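The following minimal, audio-free sketch shows why the array-of-objects structure made upgrades cheap. It is written in plain Java, since Processing sketches compile to Java. The names `Track` and `Mixer` are hypothetical stand-ins for the `Song` class and the global `kS`/`aS` indices. Loading and playing audio with Minim is deliberately left out.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, audio-free stand-in for the sketch's Song class.
class Track {
    final String title;
    final boolean acapella;   // true = vocal side, false = karaoke side
    boolean paused = true;

    Track(String title, boolean acapella) {
        this.title = title;
        this.acapella = acapella;
    }
}

// Keeps at most one playing track per side, like the kS/aS indices in the sketch.
class Mixer {
    final List<Track> tracks = new ArrayList<>();
    int karaokeIndex = -1;    // -1 means nothing is playing on that side
    int acapellaIndex = -1;

    // Adding a song is one call, mirroring "songs[i] = new Song(...)".
    void add(String title, boolean acapella) {
        tracks.add(new Track(title, acapella));
    }

    // Start track i and pause whatever was playing on the same side.
    void play(int i) {
        Track t = tracks.get(i);
        int current = t.acapella ? acapellaIndex : karaokeIndex;
        if (current != -1 && current != i) {
            tracks.get(current).paused = true;
        }
        t.paused = false;
        if (t.acapella) {
            acapellaIndex = i;
        } else {
            karaokeIndex = i;
        }
    }
}
```

Each side keeps its own index, so starting a new karaoke track never interrupts the vocal track that is playing, and vice versa.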

Controlling the cursor was perhaps the most important technical part, because it is what lets the user interact with the project. It had to be as seamless as possible, which is why I focused on it a lot. The Kinect integration itself was simple, since I started from the code on Dan Shiffman’s website. However, hand detection was VERY buggy at first, and I had to make many changes to tune it for my requirements:

- The first important change was making the cursor return to a fixed spot whenever no pixel is closer than the depth threshold, i.e. when no hand is detected. This reduced some unintentional clicking.

- Second, I modified Dan Shiffman’s Kinect class to suit my needs by adding two counters inside the pixel loop:
  - totalArea, which counts every pixel.
  - handArea, which counts only pixels closer than the threshold.

  I then only let the cursor move when handArea/totalArea < 0.065. Testing showed that a hand covers less than 6.5% of the Kinect’s frame, so a larger blob is probably not just a hand.

- I made everything hover-based. Each song, pause button and volume control has its own counter, and it only activates after the cursor has hovered over it for a certain number of frames. This dramatically reduced unintentional clicks.
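The first two tracking changes amount to a thresholded centroid with a size check. Below is a hedged sketch in plain Java. It assumes `depth` is a row-major array of raw Kinect readings where smaller values mean closer, which is how the tracker’s `rawDepth < threshold` test treats them. The class and method names are hypothetical, and the fallback point is passed in rather than hard-coded.

```java
// Hypothetical helper showing the tracker's two tweaks: average the pixels
// closer than the threshold, but fall back to a fixed "parked" point when
// nothing is found or the blob is too large to be a single hand.
class HandTracker {
    // Largest fraction of the frame the author found a hand to cover.
    static final double MAX_HAND_FRACTION = 0.065;

    // depth: row-major raw readings, width*height long; smaller = closer.
    // Returns {x, y} in depth-image pixel coordinates.
    static float[] locate(int[] depth, int width, int height, int threshold,
                          float fallbackX, float fallbackY) {
        long sumX = 0, sumY = 0;
        int handArea = 0;                       // pixels closer than threshold
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                if (depth[x + y * width] < threshold) {
                    sumX += x;
                    sumY += y;
                    handArea++;
                }
            }
        }
        double fraction = (double) handArea / ((double) width * height);
        if (handArea == 0 || fraction >= MAX_HAND_FRACTION) {
            return new float[] {fallbackX, fallbackY};   // no plausible hand
        }
        return new float[] {(float) sumX / handArea, (float) sumY / handArea};
    }
}
```

In the actual tracker, the resulting point is then smoothed with `lerp` (factor 0.3) before it is used, which further damps jitter.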

Design and Interactivity

As you have seen, the design changed significantly between the 90% version and the final version. Let me take you through some of my decisions.

- Adding icons was important, as the professor suggested, because they give users a clear idea of what will happen when clicked. That is why I used icons for pause and a sound icon next to the volume controllers. This worked well in practice: I didn’t have to explain much, and users simply knew what to do.

- I added a hover shade effect on song names and a color change on the pause buttons when hovered, so that first-time users would know the song (or pause button) is clickable. The song shade also brightens as its hover counter fills up. This gives a familiar ‘hyperlink’ feel, which makes the interaction clearer.

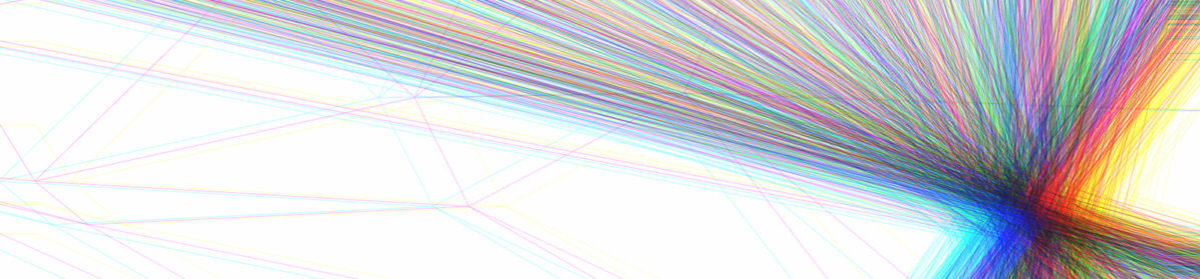

- I put a lot of work into the volume controller, which is why it has its own class. I designed it like the volume fader on a DJ rack, where the user moves a knob along a slider to control the volume. This way users could relate it to a sound mixer and know what it does. The knob’s height maps linearly to a gain between -30 dB and 0 dB. The controller’s background was initially made of equally spaced horizontal lines, but I changed it to random, constantly moving lines to give it a live feel and signal that it can be used.

- I needed a good font, as the one I had selected initially didn’t appeal to many users. I wanted a font that would be:

- Easy to read

- Reminiscent of old-school tech (like text on a small LED display)

- Intriguing enough to catch the attention of passers-by

After considerable research, I found a font called “underwood”, which fit these criteria.

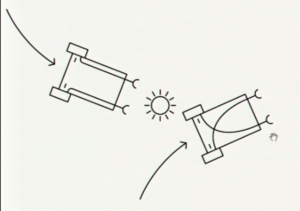

- The background image of a DJ rack, which I found on Google Images, was a nice addition that fit the project well. The two racks matched the two sides (karaoke and a cappella) that the user could choose songs from. Many users actually started hovering over the DJ racks themselves, thinking they would do something.
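The volume controller’s behavior can be sketched without Processing: a hover delay, then a linear mapping from cursor height to gain. This is an illustrative Java version, not the class from the sketch. `VolumeSlider` and its fields are hypothetical. The 15-frame delay, 50 px padding and -30 to 0 dB range come from the `VolumeController` code. The clamp is an addition, needed because the padded hover zone extends past the slider’s ends.

```java
// Hypothetical, framework-free take on VolumeController: the slider only
// responds after the cursor has hovered in its zone for more than
// DWELL_FRAMES frames, then maps cursor height linearly onto a gain in dB.
class VolumeSlider {
    static final float MIN_DB = -30f;
    static final float MAX_DB = 0f;
    static final int DWELL_FRAMES = 15;   // the sketch checks lVolCounter > 15

    final float x, top, w, h, padding;
    float gainDb = MAX_DB;                // the sketch starts at volume = 0 dB
    int hoverFrames = 0;                  // consecutive frames inside the zone

    VolumeSlider(float x, float top, float w, float h, float padding) {
        this.x = x;
        this.top = top;
        this.w = w;
        this.h = h;
        this.padding = padding;
    }

    // Hover zone: the slider rectangle grown by `padding` on every side.
    boolean inZone(float px, float py) {
        return px > x - padding && px < x + w + padding
            && py > top - padding && py < top + h + padding;
    }

    // Call once per frame with the cursor position; returns the gain to apply.
    float update(float px, float py) {
        if (!inZone(px, py)) {
            hoverFrames = 0;              // leaving the zone resets the delay
            return gainDb;
        }
        if (hoverFrames > DWELL_FRAMES) {
            float fromBottom = (top + h) - py;
            float db = MIN_DB + (fromBottom / h) * (MAX_DB - MIN_DB);
            // Clamp: the padded zone extends beyond both ends of the slider.
            gainDb = Math.max(MIN_DB, Math.min(MAX_DB, db));
        }
        hoverFrames++;
        return gainDb;
    }
}
```

Without the clamp, a cursor in the padding above the slider would map to a positive gain, which boosts the track above its original level.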

The code

Main code:

import ddf.minim.*;

import ddf.minim.signals.*;

import ddf.minim.analysis.*;

import ddf.minim.effects.*;

import org.openkinect.freenect.*;

import org.openkinect.processing.*;

import processing.video.*;

KinectTracker tracker;

Kinect kinect;

Minim minim;

Movie myMovie;

AudioPlayer splatterSound;

PVector v1, v2;

float vol2, vol3;

int CurRad=30;

boolean lPaused=false;

int lCounter=0;

boolean rPaused=false;

int rCounter=0;

float pX, pY;

float maxX=0;

float maxY=0;

int kS=99;

int aS=99;

PFont sFont, bFont;

boolean debugMode=false;

PImage hand,splatter,bg,slider,djBg,bHand;

int rVolCounter=0;

int lVolCounter=0;

int rSongCounter=0;

int r2SongCounter=0;

boolean randomSongMode=false;

int stage=0;

boolean djTestPassed=false;

int djTestMarks=0;

Song[] songs = new Song[14];

float[] djHandPosX = new float[3];

float[] djHandPosY = new float[3];

float[] djCounter = new float[3];

boolean[] djCircleClicked = new boolean[3];

boolean randomHover=false;

int djTrainingFinishedCounter=0;

boolean djTrainingReached=false;

VolumeController lVolController,rVolController;

void setup() {

//size(640, 480);

fullScreen();

background(0);

kinect = new Kinect(this);

tracker = new KinectTracker();

minim = new Minim(this);

splatterSound = minim.loadFile("splatter.mp3", 1024);

sFont = createFont("underwood.ttf", 24); //cool.ttf

bFont = createFont("capture.ttf", 30);

hand=loadImage("hand.png");

bHand=loadImage("bHand.png");

splatter=loadImage("splatter.png");

slider=loadImage("slider.png");

slider.resize(50,60);

bg=loadImage("bg.jpg");

djBg=loadImage("djBg.jpg");

bg.resize(width,height);

djBg.resize(width,height);

songs[0] = new Song("k_billy.mp3", false, "billy jean", width/5, 100, 0); songs[2] = new Song("a_mnm.mp3", true, "lose yourself", width*0.55, 100, 2);

songs[1] = new Song("k_shape.mp3", false, "shape of you", width/5, 160, 1); songs[3] = new Song("a_rolling.mp3", true, "roll in the deep", width*0.55, 160, 3);

songs[5] = new Song("k_strange.mp3", false, "stranger things", width/5, 220, 5); songs[4] = new Song("a_baby.mp3", true, "baby", width*0.55, 220, 4);

songs[6] = new Song("k_beat.mp3", false, "beat", width/5, 280, 6); songs[7] = new Song("a_yodel.mp3", true, "yodel", width*0.55, 280, 7);

songs[8] = new Song("k_human.mp3", false, "human", width/5, 340, 8); songs[9] = new Song("a_love.mp3", true, "love yourself", width*0.55, 340, 9);

songs[10]= new Song("k_fancy.mp3", false, "fancy", width/5, 400, 10); songs[11]= new Song("a_closer.mp3", true, "closer", width*0.55, 400, 11);

songs[12]= new Song("k_fur_elise.mp3", false, "fur elise", width/5, 480, 12); songs[13]= new Song("a_logic.mp3", true, "logic", width*0.55, 480, 13);

kS=0;

aS=3;

songs[kS].play();

songs[aS].play();

smooth(10);

djHandPosX[0]=width/random(1,1.5); djHandPosX[1]=width/2; djHandPosX[2]=width/6;

djHandPosY[0]=height/1.5; djHandPosY[1]=height/5; djHandPosY[2]=height/1.8;

djCircleClicked[0]=false; djCircleClicked[1]=false; djCircleClicked[2]=false;

djCounter[0]=0; djCounter[1]=0; djCounter[2]=0;

lVolController= new VolumeController(width/7-50,250,50,250);

rVolController= new VolumeController(width-width/7,250,50,250);

tracker.setThreshold(435);

}

void draw() {

if(djTestPassed) {

background(bg);

tracker.track();

tracker.display();

v1 = tracker.getPos();

v2 = tracker.getLerpedPos();

pX=mouseX;pY=mouseY;

//pX=v2.x;pY=v2.y;

//pX=map(v2.x, 0, 640, 0, width); pY=map(v2.y, 0, 480, 0, height);

//pX=map(v1.x, 0, 640, 0, width); pY=map(v1.y, 0, 480, 0, height);

if (pX>maxX) {

maxX=pX;

}

if (pY>maxY) {

maxY=pY;

}

int t = tracker.getThreshold();

noStroke();

lVolController.draw(songs[kS]);

rVolController.draw(songs[aS]);

float radius;

for (Song ss : songs) {

ss.draw();

}

//HOVER CLICKING CODE

if ((kS!=99)&&(songs[kS].acapella==acapella)&&songClicked) {

songs[kS].pause();

}

if ((aS!=99)&&(songs[aS].acapella==acapella)&&songClicked) {

songs[aS].pause();

}

int indexer=0;

for (Song ss : songs) {

if ((!ss.paused)&&(!ss.clicked())) {

if (ss.acapella) {

aS=indexer;

} else {

kS=indexer;

}

}

if (ss.clicked()) {

songClicked=true;

}

indexer++;

}

int indexer2=0;

for (Song ss : songs) {

if ((ss.clicked())&&songClicked) {

ss.click();

}

}

indexer2=0;

songClicked=false;

//HOVER CLICKING CODE END

if (CurRad<30) {

CurRad=30;

}

if (pX<width/2) {

if (lPaused) {

radius = CurRad + 30 * sin( frameCount * 0.05f );

} else {

radius=CurRad;

}

fill(155, 18, 13);

} else {

if (rPaused) {

radius = CurRad + 30 * sin( frameCount * 0.05f );

} else {

radius=CurRad;

}

fill(16, 46, 117);

}

if((randomSongMode)||(randomHover)) {fill(200);} else {fill(255);}

rect(width/2-100,height/1.35,200,35);

textFont(sFont);

textAlign(CENTER);

fill(0);

text("RANDOM",width/2-100,height/1.35+8,200,35);

textFont(sFont);

imageMode(CENTER);

image(hand,pX,pY,50,50);

randomSong();

randomSongMode();

} else {

background(djBg);

tracker.track();

tracker.display();

v1 = tracker.getPos();

v2 = tracker.getLerpedPos();

pX=mouseX;pY=mouseY;

//pX=v2.x;pY=v2.y;

//pX=map(v2.x, 0, 640, 0, width); pY=map(v2.y, 0, 480, 0, height);

//pX=map(v1.x, 0, 640, 0, width); pY=map(v1.y, 0, 480, 0, height);

if (pX>maxX) {

maxX=pX;

}

if (pY>maxY) {

maxY=pY;

}

int t = tracker.getThreshold();

tracker.setThreshold(630);

textSize(55);

fill(0);

textAlign(CENTER);

if(!djTrainingReached) {

text("Place your hand on the circles for DJ Training",width/18,100,width,100);

}

djTraining();

imageMode(CENTER);

if(djTestPassed) {

image(hand,pX,pY,50,50);

} else {

image(bHand,pX,pY,50,50);

}

}

}

void keyPressed() {

int t = tracker.getThreshold();

if (key == CODED) {

if (keyCode == UP) {

t+=5;

tracker.setThreshold(t);

} else if (keyCode == DOWN) {

t-=5;

tracker.setThreshold(t);

}

fill(255);

text(t,width/2,height-100);

println(t);

}//END KINECT

if (key == 'x'||key == 'X') {

debugMode=!debugMode;

}

if (key == 'R'||key == 'r') {

randomSongMode=!randomSongMode;

}

if (key == 'd'||key == 'D') {

djTestMarks=0;

djCircleClicked[0]=false;

djCircleClicked[1]=false;

djCircleClicked[2]=false;

djTrainingReached=false;

djTrainingFinishedCounter=0;

djTestPassed=!djTestPassed;

debugMode=true;

}

}

boolean acapella;

boolean songClicked=false;

Song class:

class Song {

Minim minimThing;

AudioPlayer song;

boolean paused=true;

boolean acapella;

float x, y;

String title;

int index;

boolean clicked=false;

int clickCounter=0;

float aX=width/2;

float aY=20;

float kX=width/4;

float kY=20;

float curX=x;

float curY=y;

float prevX;

float prevY;

float defaultX;

float defaultY;

float Lx, Ly;

boolean hover=false;

Song(String fileName, boolean songType, String titleVal, float xPos, float yPos, int indexer) {

minimThing = new Minim(this);

song = minim.loadFile(fileName, 1024);

acapella=songType;

x=xPos;

y=yPos;

title=titleVal;

index=indexer;

prevX=x;

prevY=y;

defaultX=x;

defaultY=y;

}

void draw() { //boolean songType=true means acapella else karaoke

textSize(24);

textAlign(CENTER);

if (paused) {

clicked();

if(!hover) {

fill(255); } else {

fill(100+clickCounter);

}

textSize(24);

textFont(sFont);

Lx = lerp(prevX, x, 0.05);

Ly = lerp(prevY, y, 0.05);

text(title, Lx, Ly, 300, 50);

curX=x;

curY=y;

} else if (acapella) {

//textFont(bFont);

fill(0);

Lx = lerp(prevX, aX, 0.05);

Ly = lerp(prevY, aY, 0.05);

fill(255);

rect(aX, aY, 300, 50);

fill(0);

if(!hover) {fill(0);} else {fill(0,0,200);}

rect(aX+10,aY+10,10,30);

rect(aX+23,aY+10,10,30);

fill(0);

textAlign(CENTER);

text(title, Lx+15, Ly+13, 300, 50);

curX=aX;

curY=aY;

} else {

//textFont(bFont);

fill(0);

Lx = lerp(prevX, kX, 0.05);

Ly = lerp(prevY, kY, 0.05);

fill(255);

rect(kX, kY, 300, 50);

if(!hover) {fill(0);} else {fill(0,0,200);}

rect(kX+10,kY+10,10,30);

rect(kX+23,kY+10,10,30);

fill(0);

textAlign(CENTER);

text(title, Lx+40, Ly+13, 200, 50);

curX=kX;

curY=kY;

}

prevX=curX;

prevY=curY;

}

void play() {

//print(title);

if (acapella) {

if (clicked()) {

songs[aS].pause();

}

aS=index;

} else {

if (clicked()) {

songs[kS].pause();

}

kS=index;

}

song.loop();

paused=false;

}

void pause() {

song.pause();

paused=true;

}

void togglePlay() {

if (paused) {

play();

} else {

pause();

}

}

void setGain(float vol) {

song.setGain(vol);

}

boolean clicked() {

if ((pX>curX)&&(pX<curX+200)&&(pY>curY)&&(pY<curY+50)) {

hover=true;

if ((clickCounter==70)) {

CurRad=30;

clicked=true;

return true;

} else {

clickCounter++;

CurRad=clickCounter;

clicked=false;

}

} else {

hover=false;

CurRad=30;

clickCounter=0;

clicked=false;

return false;

}

clicked=false;

return false;

}

void click() {

togglePlay();

//background(255);

}

}

void randomSong() {

//rect(width/2-100,height/1.35,200,35);

if((pX>(width/2-100))&&(pX<(width/2+100))&&(pY>(height/1.35))&&(pY<((height/1.35))+35)) {

randomHover=true;

if((rSongCounter==25)||(((rSongCounter%125)==0)&&(rSongCounter!=0))) {

int first= int(random(0,songs.length));

int second=int(random(0,songs.length));

while((songs[first].acapella==songs[second].acapella)) {

first= int(random(0,songs.length));

second=int(random(0,songs.length));

}

songs[aS].pause();

songs[kS].pause();

songs[first].play();

songs[second].play();

}

rSongCounter++;

} else {

randomHover=false;

rSongCounter=0;

}

}

void randomSongMode() {

if(!randomSongMode) {r2SongCounter=0;} else {

if((((r2SongCounter%400)==0)&&(r2SongCounter!=0))) {

int first= int(random(0,songs.length));

int second=int(random(0,songs.length));

while((songs[first].acapella==songs[second].acapella)) {

first= int(random(0,songs.length));

second=int(random(0,songs.length));

}

songs[aS].pause();

songs[kS].pause();

songs[first].play();

songs[second].play();

}

r2SongCounter++;

}

}

Upgraded Kinect class from Dan Shiffman:

// Daniel Shiffman

// Tracking the average location beyond a given depth threshold

// Thanks to Dan O'Sullivan

// https://github.com/shiffman/OpenKinect-for-Processing

// http://shiffman.net/p5/kinect/

class KinectTracker {

// Depth threshold

int threshold = 435;

// Raw location

PVector loc;

// Interpolated location

PVector lerpedLoc;

// Depth data

int[] depth;

// What we'll show the user

PImage display;

KinectTracker() {

// This is an awkward use of a global variable here

// But doing it this way for simplicity

kinect.initDepth();

kinect.initVideo();

kinect.enableMirror(true);

// Make a blank image

display = createImage(kinect.width, kinect.height, RGB);

// Set up the vectors

loc = new PVector(0, 0);

lerpedLoc = new PVector(0, 0);

}

void track() {

// Get the raw depth as array of integers

depth = kinect.getRawDepth();

float totalArea=0;

float handArea=0;

// Being overly cautious here

if (depth == null) return;

float sumX = 0;

float sumY = 0;

float count = 0;

for (int x = 0; x < kinect.width; x++) {

for (int y = 0; y < kinect.height; y++) {

totalArea++;

int offset = x + y*kinect.width;

// Grabbing the raw depth

int rawDepth = depth[offset];

// Testing against threshold (a smaller raw depth means closer to the sensor)

if (rawDepth < threshold) {

handArea++;

sumX += x;

sumY += y;

count++;

}

}

}

// As long as we found something, and it covers less than 6.5% of the frame (roughly hand-sized)

//println(sumY);

//println((handArea/totalArea));

if ((count != 0)&&((handArea/totalArea)<0.065)) { //if ((count != 0)&&(sumX<8000000)&&(sumX>150000)&&(sumY<6000000)&&(sumY>1000)) {

loc = new PVector(sumX/count, sumY/count);

} else {

// No plausible hand: park the cursor at a fixed spot. Doing this once here,

// instead of inside the pixel loop, avoids allocating a PVector for every pixel.

loc = new PVector(kinect.width/2, kinect.height/1.2);

}

// Interpolating the location, doing it arbitrarily for now

lerpedLoc.x = PApplet.lerp(lerpedLoc.x, loc.x, 0.3f);

lerpedLoc.y = PApplet.lerp(lerpedLoc.y, loc.y, 0.3f);

}

PVector getLerpedPos() {

return lerpedLoc;

}

PVector getPos() {

return loc;

}

void display() {

PImage img = kinect.getDepthImage();

PImage webcam = kinect.getVideoImage();

// Being overly cautious here

if (depth == null || img == null) return;

// Going to rewrite the depth image to show which pixels are in threshold

// A lot of this is redundant, but this is just for demonstration purposes

display.loadPixels();

for (int x = 0; x < kinect.width; x++) {

for (int y = 0; y < kinect.height; y++) {

int offset = x + y * kinect.width;

// Raw depth

int rawDepth = depth[offset];

int pix = x + y * display.width;

if (rawDepth < threshold) {

// A red color instead

display.pixels[pix] = color(255, 10, 10);

} else {

//display.pixels[pix] = color(255);

display.pixels[pix] = img.pixels[offset];

}

}

}

display.updatePixels();

// Draw the image

if(debugMode) {

image(display,width/2, height*0.75,width/4,height/4); //display/webcam

}

}

int getThreshold() {

return threshold;

}

void setThreshold(int t) {

threshold = t;

}

}

Volume Controller class:

class VolumeController {

int x,y;

int lVolCounter=0;

int w;

int h;

int padding=50;

float volume=0;

PImage audioImage;

float defaultVolume=0;

VolumeController(int xPosition, int yPosition, int dWidth,int dHeight) {

x=xPosition;

y=yPosition;

w=dWidth;

h=dHeight;

audioImage=loadImage("audio.png");

audioImage.resize(25,25);

}

void draw(Song curSong) {

float displayVol=map(volume,-30,0,0,h);

float pos=(y+h)-displayVol;

if(((pX>x-padding)&&(pX<x+w+padding)&&(pY>y-padding)&&(pY<y+h+padding))) {

// if(((pX>x-padding)&&(pX<x+w+padding)&&(pY>pos-20)&&(pY<pos+20))) {

if(lVolCounter>15) {

volume = constrain(map((y+h)-pY, 0, h, -30, 0), -30, 0); // clamp: the padded hover zone extends past the slider

displayController(curSong);

curSong.setGain(volume);

} else {

displayController(curSong);

}

lVolCounter++;

} else {

lVolCounter=0;

displayController(curSong);

}

}

void displayController(Song curSong) {

image(audioImage,x+w/2,y-25);

stroke(0);

noFill();

rect(x,y,w,h);

noStroke();

for(int yp=0;yp<h;yp++){

if(curSong.paused) {

if(yp%15==0) {

fill(random(180,200));

}

}

else if(yp%2==0) {

fill(random(230,255));

} else if(yp%10==0) {

fill(random(200,220));

} else if(yp%15==0) {

fill(random(180,200));

}

rect(x+5,y+yp,w-5,1);

}

fill(0);

rect(x+w/2,y,5,h);

fill(0,0,128);

noStroke();

//rect(5, height*0.65-volDis2, 30, volDis2);

float displayVol=map(volume,-30,0,0,h);

float pos=(y+h)-displayVol;

if(pos>(y+h)-20) {pos=y+h-20;} else if(pos<(y)){pos=y;}

rect(x,pos, w, 20);

}

}

DJ Training function:

void djTraining() {

boolean playSound=false;

for(int x=0;x<(djHandPosX.length);x++) {

//ellipse(djHandPosX[x],djHandPosY[x],40,40);

if((pX>djHandPosX[x]-25)&&(pX<djHandPosX[x]+25)&&(pY>djHandPosY[x]-25)&&(pY<djHandPosY[x]+25)) {

fill(255,0,0);

//djHandPosX[x]=9000;

//djHandPosY[x]=9000;

djCounter[x]++;

if(djCounter[x]==40) {playSound=true;}

if(djCounter[x]==55) {

djCircleClicked[x]=true;

djTestMarks++;

}

} else {

fill(50);

djCounter[x]=0;

}

if(!djCircleClicked[x]) {

noStroke();

ellipse(djHandPosX[x],djHandPosY[x],50+djCounter[x],50+djCounter[x]);

} else {

if (!splatterSound.isPlaying()) { splatterSound.rewind();

}

image(splatter,djHandPosX[x],djHandPosY[x],300,300);

}

if(playSound) {

splatterSound.play();

}}

if(djTestMarks==djHandPosX.length) {

djTrainingFinishedCounter++;

djTrainingReached=true;

debugMode=false;

fill(0);

text("Get your DJ Belts on.",width/2,height/2);

if(djTrainingFinishedCounter>120) {

djTestPassed=true;

}

}

}