Initially I was at a loss for what to do, but during the class discussion the idea of making some sort of Rube Goldberg-esque ball game came up. Though it wasn't stated at the time, someone later referred to it as "inverse pinball", which is an accurate and succinct description.

My initial idea was a fully 3D, tower-like set of paths, with various controls to direct the ball along them, as in the image below. That type of ball-block set remained my primary aesthetic inspiration, even as the idea itself changed.

The first and most significant change to this idea came from the complexity of building the project as I originally envisioned it. Although the 3D version is technically equivalent to the updated idea, giving it enough choices and moments of interaction to be worthwhile would have required it to be large, and the extra size would have demanded additional construction techniques without demonstrating much additional technical skill. Instead, I decided to make the game flat and wall-mounted, and replaced the choice of paths with various pitfalls.

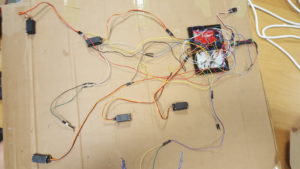

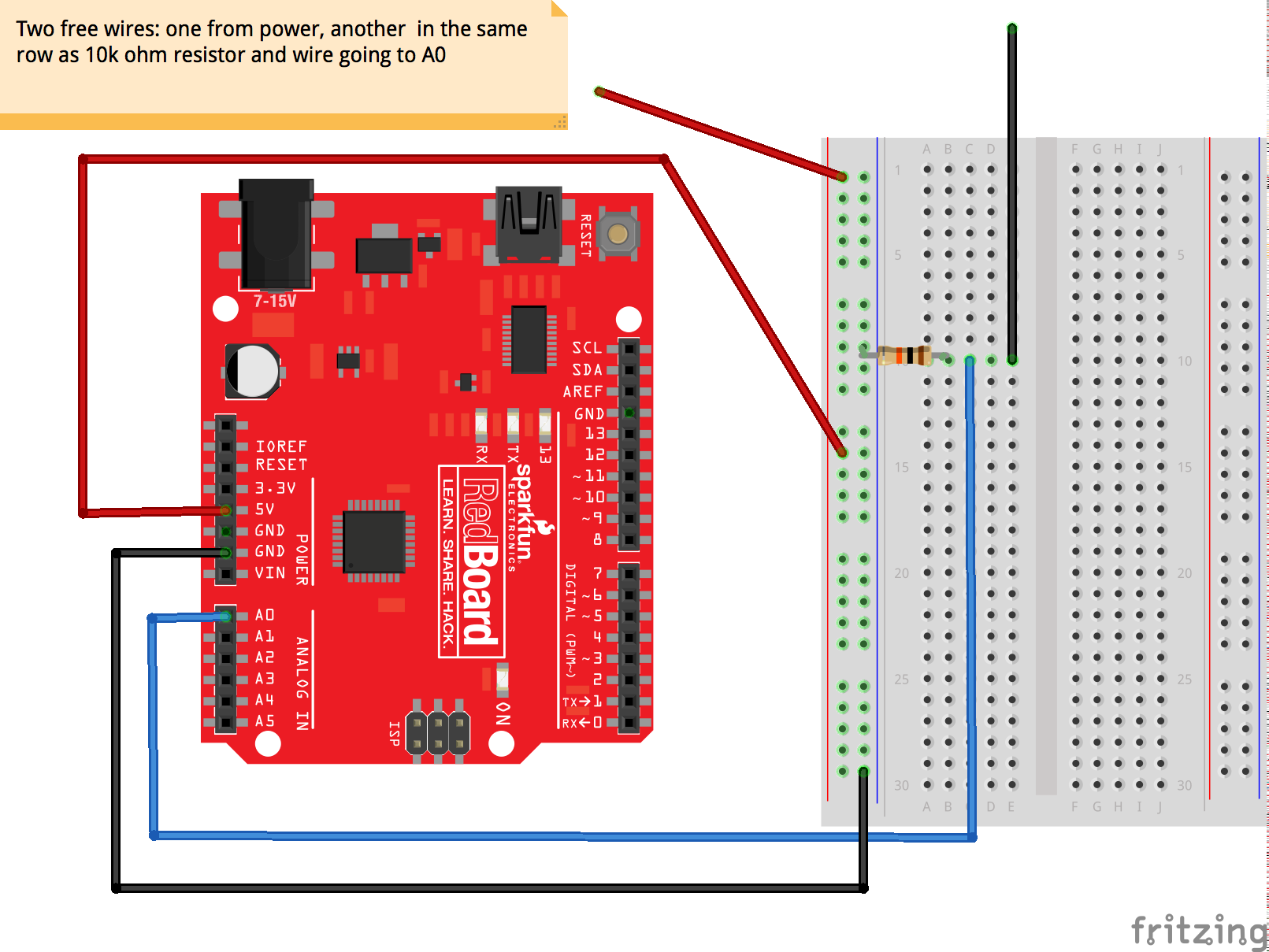

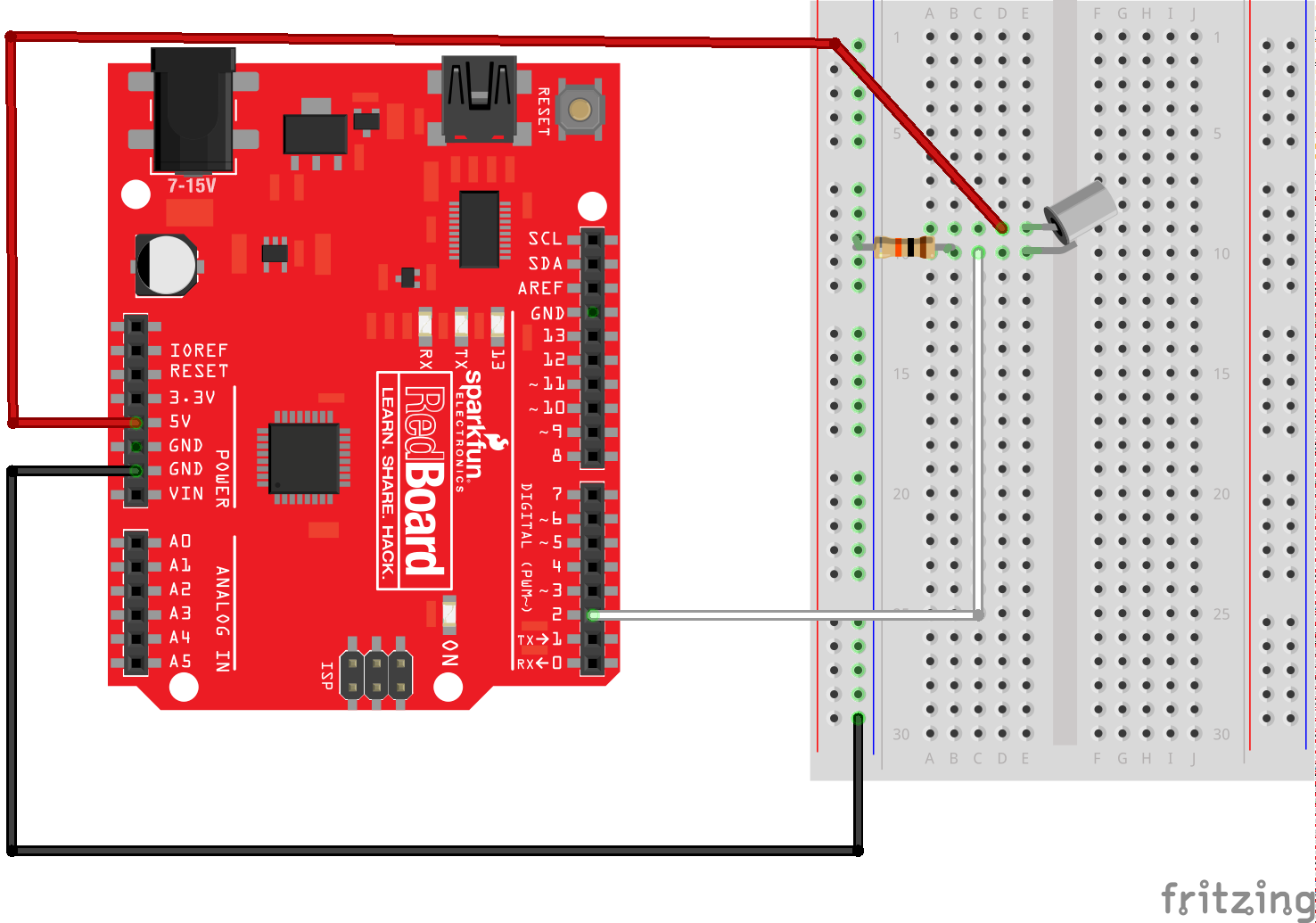

In response to this change, it made sense to use a different control mechanism. In my original concept the player would control the game using various inputs located on the game itself, many of them requiring physical contact. With the game now wall-mounted, it no longer made sense to require the player to be in such close proximity or to use such precise controls. Instead, all the mechanisms are controlled by a single input, a light sensor, set with a very low threshold: it triggers when its reading falls below half of a baseline reading taken at startup and after each game. The following images show the game at various stages of construction; unfortunately, I disassembled the electronics before collecting video documentation.

[Pictures 1 to 4: the game at various stages of construction]
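The light-sensor trigger described above comes down to a comparison against a reading taken at startup. As a host-side sketch, the C++ below isolates that rule (the same `< background / 2` test used by `activated()` in the Arduino code) from the hardware. The helper names `calibrateBackground` and `isActivated` are hypothetical, and averaging several startup samples is an assumption; the original sketch takes a single `analogRead()`.

```cpp
#include <numeric>
#include <vector>

// Hypothetical helper: average several startup readings to estimate the
// ambient light level. (Assumption: the original takes a single reading.)
int calibrateBackground(const std::vector<int>& samples) {
    if (samples.empty()) {
        return 0;
    }
    long sum = std::accumulate(samples.begin(), samples.end(), 0L);
    return static_cast<int>(sum / static_cast<long>(samples.size()));
}

// Same rule as activated(): the sensor counts as covered once its reading
// falls below half of the ambient level.
bool isActivated(int reading, int background) {
    return reading < background / 2;
}
```

Because the threshold is relative to the level measured at startup, the trigger adapts to whatever lighting the room has at that moment rather than relying on a fixed number.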

Each of the servo motors controls a flap in the path, as can be seen in picture 3. They are set so that at one extreme of their motion they form a smooth path, while at the other the flap is fully open. Unfortunately, the cardboard lost much of its structure during construction, so pieces that initially returned to their true position now remain open. In addition, there is a solenoid that, if triggered with proper timing, should propel the ball across a small gap in the path. The ball itself is a ping-pong ball wrapped in tinfoil. In the game's current implementation the tinfoil serves no purpose, but mechanisms have been implemented that partially allow the result of the game (win or loss) to be displayed: the game records the result, but does not make use of it yet.
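The win/loss bookkeeping described above can be sketched on its own. The C++ below mirrors the rules in the Arduino code: a game is won when the finish sensor reads more than 250 above its startup baseline, and lost when 10 seconds pass first, using the same codes as `lastgame` (1 for a win, 2 for a loss). `Result`, `finishTriggered`, and `checkGame` are hypothetical names, and `uint32_t` stands in for the 32-bit `unsigned long` returned by Arduino's `millis()`.

```cpp
#include <cstdint>

// Same codes as the sketch's lastgame: 0 = no result yet, 1 = win, 2 = loss.
enum class Result : int { None = 0, Win = 1, Loss = 2 };

// Same rule as win(): triggered when the reading rises more than 250
// above the baseline taken at startup.
bool finishTriggered(int reading, int baseline) {
    return reading > baseline + 250;
}

// Decide the outcome of a running game at time nowMs.
// Unsigned subtraction keeps nowMs - startMs correct across a wraparound.
Result checkGame(uint32_t nowMs, uint32_t startMs, int finishReading,
                 int baseline, uint32_t limitMs = 10000) {
    if (finishTriggered(finishReading, baseline)) {
        return Result::Win;
    }
    if (nowMs - startMs > limitMs) {
        return Result::Loss;
    }
    return Result::None;
}
```

Computing the elapsed time as `nowMs - startMs` in unsigned arithmetic stays correct across the roughly 49.7-day `millis()` rollover. By contrast, storing the start time in a 16-bit `int` (as on AVR boards) overflows once `millis()` passes 32,767, about 32.8 seconds after power-up.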

While I am content with this project as a proof of concept or early prototype, it is flawed in almost every aspect, most obviously in its level design. However, I intend to remake it: using more permanent materials such as wood, implementing a scoring system, improving user interaction and accessibility, and potentially adding features such as markers that indicate whenever the player passes each challenge. As we study using the computer to communicate with the Arduino, I may find inspiration for additional features, but my current vision is intentionally minimalist.

(The following is the code I used.)

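```cpp
#include <Servo.h>

Servo startServo;   // moved to 180 when a game starts
Servo servo1;       // flap servos
Servo servo2;
Servo servo3;
Servo servo4;
Servo servo5;

const int button = A0;     // light sensor: the game's only input
const int solenoid = 13;
const int winning = A1;    // sensor read by win()

int background;            // ambient light-sensor reading
int baseline;              // idle reading of the win sensor

bool game = false;
unsigned long startime = 0;   // millis() value when the game started
int lastgame = 0;             // 0 = no result yet, 1 = win, 2 = loss
bool moving = false;

// Recalibrate both sensors and return every servo to its starting position.
void resetGame() {
  background = analogRead(button);
  baseline = analogRead(winning);
  startServo.write(0);
  servo1.write(180);
  servo2.write(0);
  servo3.write(0);
  servo4.write(0);
  servo5.write(0);
}

void setup() {
  Serial.begin(9600);
  startServo.attach(3);
  servo1.attach(9);
  servo2.attach(11);
  servo3.attach(5);
  servo4.attach(6);
  servo5.attach(10);
  pinMode(solenoid, OUTPUT);
  //digitalWrite(solenoid, HIGH);
  resetGame();
}

void loop() {
  if (!moving) {
    if (!game && activated()) {
      // Covering the sensor starts a new game.
      game = true;
      startServo.write(180);
      startime = millis();
    } else if (game && activated()) {
      // While the sensor stays covered, the flaps keep toggling.
      moveServos();
    }

    // Unsigned subtraction stays correct even when millis() wraps around.
    if (game && millis() - startime > 10000UL) {
      lastgame = 2;   // loss: time ran out
      game = false;
      resetGame();
    }

    if (!game) {
      if (lastgame == 1) {
        displayWin();
      } else if (lastgame == 2) {
        displayLoss();
      }
    }
  }

  if (game && win()) {
    lastgame = 1;   // win: the win sensor was triggered
    game = false;
    resetGame();
  }
}

// Placeholders: the result is recorded in lastgame but not displayed yet.
void displayWin() {
}

void displayLoss() {
}

// True when the light sensor reads below half of the ambient level.
bool activated() {
  return analogRead(button) < background / 2;
}

// True when the win sensor rises more than 250 above its idle reading.
bool win() {
  bool won = analogRead(winning) > (baseline + 250);
  if (won) {
    Serial.println("won!");
  }
  return won;
}

// Swing every flap to one extreme and back, driving the solenoid low then
// high. delay() blocks, so each call takes about one second.
void moveServos() {
  moving = true;
  Serial.println("here");

  servo1.write(180);   // servo1 always moves opposite to the others
  servo2.write(0);
  servo3.write(0);
  servo4.write(0);
  servo5.write(0);
  digitalWrite(solenoid, LOW);
  delay(500);

  servo1.write(0);
  servo2.write(180);
  servo3.write(180);
  servo4.write(180);
  servo5.write(180);
  digitalWrite(solenoid, HIGH);
  delay(500);

  moving = false;
}
```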
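One possible alternative to the `delay()`-based `moveServos()` is a timer that `loop()` polls, so the sensors keep being checked while the flaps move. The class below is a hypothetical host-side sketch (`FlapToggler` and its methods are not part of the original code). It flips between the same two positions the sketch uses every 500 ms: servo1 moves opposite to the other servos, and the solenoid is high only in the second position.

```cpp
#include <cstdint>

// Hypothetical non-blocking flap timer. The caller passes the current time
// (e.g. millis()) on every loop pass instead of blocking in delay().
class FlapToggler {
public:
    explicit FlapToggler(uint32_t periodMs = 500) : period_(periodMs) {}

    // Returns true when the flaps should switch position on this call.
    bool update(uint32_t nowMs) {
        if (nowMs - last_ >= period_) {   // wrap-safe unsigned subtraction
            last_ = nowMs;
            toggled_ = !toggled_;
            return true;
        }
        return false;
    }

    // Angles mirror the original: the rest position is servo1 = 180,
    // others = 0; the toggled position is servo1 = 0, others = 180.
    int servo1Angle() const { return toggled_ ? 0 : 180; }
    int otherServoAngle() const { return toggled_ ? 180 : 0; }
    bool solenoidHigh() const { return toggled_; }

private:
    uint32_t period_;
    uint32_t last_ = 0;
    bool toggled_ = false;
};
```

On the board, `update(millis())` would be called on every pass through `loop()`, with the servos and solenoid written only when it returns `true`.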

2. Creating the “drum-skin” for the rainstick